Can we convincingly test an IIS application before we publish it?

Bottom Line: Yes, though a lot depends on creating realistic tests.

Other issues we addressed include: whether these applications can be managed and deployed remotely, what good business models look like, exception handling, roles in the organisation and how to make them happen, how to obtain server resources, and good software design.

Prerequisites: This material is for a specialised audience who:

- Are interested in deploying IIS applications built with Visual Basic 6

- Understand the basics of web publishing (file upload via FTP and FrontPage)

- Are familiar with the 32-bit Windows operating systems

- Have basic familiarity with IIS (or its near relatives such as PWS)

Performance Testing an IIS Application

For a more detailed write-up, go here.

The recent introduction of IIS applications promises an easy way to create smart web sites. The technology looks exciting, but we have been disappointed before, so we decided to check whether it could be used as required.

Our approach was to answer the question by doing it, using a pathfinder project that:

- Compared HTML, ASP and IIS applications served from IIS 4. This involved creating a near-identical web site in each of the three formats. The site has frames, a few GIF buttons, some graphics and 7 content pages. A fourth version, in which ASP directly accessed the component behind the WebClass, is not presented here.

- The tag replacement features of IIS applications were of special interest.

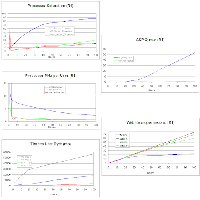

- Tests were run with different numbers of users, recording a few key performance metrics. User loads ranged from 1 to 100 simultaneous users.

- Most tests were performed over a LAN so that the network connection would not become the bottleneck. (Other tests were performed over a dial-up connection to a server on the Internet.)

- The test illustrated here involved simulated users who accessed most pages on the web site. Each user's behaviour was derived from server logs. Users paused for only 4 to 9 seconds between page requests. This is a rather harsh test that imitates the behaviour of experienced users. (Novice users are expected to load the server less.)

- Tests were conducted using the Microsoft Homer web stress testing tool. (This tool has since been succeeded by others.)

- This test used only data already in memory; it neither accessed the database nor tried to force exceptions. In other words, the features that use persistent storage are not reported here.

- Server logging was disabled. (Logging to a flat file was switched on for one sub-test. When logs grow large on the web server machine itself, they consume many processor cycles.)

- Results are mean values over more than 100 data points per run. Means obscure the detailed dynamic behaviour but seem adequate here. Detailed dynamic behaviour was monitored for many of the tests but is not presented here.

- Tests were run on a 266 MHz single-processor HP server running a clean installation of NT 4 and IIS 4. (Service Pack 4 was installed, as were various components such as Windows Script Host (WSH) with the version 5 scripting engines.)

- Process state was examined in some detail but has no direct bearing on this analysis.
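The user behaviour described in the list above (simulated users requesting pages with only a 4 to 9 second pause, at loads of 1 to 100 users) can be sketched in Python as below. This is a minimal illustration, not how Homer works internally: the uniform think-time distribution, the page names and the functions `user_schedule` and `offered_load` are all assumptions made for this sketch. The second function applies the standard closed-loop relation, throughput = users / (think time + response time), which shows why short think times make this a harsh test.

```python
import random
from typing import List, Tuple

# Think-time bounds taken from the test description (4 to 9 seconds).
# A uniform distribution between them is an assumption of this sketch.
MIN_THINK_S = 4.0
MAX_THINK_S = 9.0


def user_schedule(pages: List[str], rng: random.Random) -> List[Tuple[float, str]]:
    """Return (start offset in seconds, page) pairs for one simulated user.

    The first page is requested at t = 0; each later request follows a
    random think time drawn uniformly from [MIN_THINK_S, MAX_THINK_S].
    Response time is ignored, so offsets measure think time only.
    """
    schedule: List[Tuple[float, str]] = []
    t = 0.0
    for i, page in enumerate(pages):
        if i > 0:
            t += rng.uniform(MIN_THINK_S, MAX_THINK_S)
        schedule.append((t, page))
    return schedule


def offered_load(users: int, mean_think_s: float, mean_response_s: float) -> float:
    """Requests per second generated by a closed population of users.

    Each user cycles through "wait for the response, then think", so
    throughput is users / (think time + response time).
    """
    cycle_s = mean_think_s + mean_response_s
    if users < 0 or cycle_s <= 0:
        raise ValueError("need users >= 0 and a positive cycle time")
    return users / cycle_s


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical page names; the real sequence came from server logs.
    for offset, page in user_schedule(["/index.htm", "/page1.htm", "/page2.htm"], rng):
        print(f"{offset:6.2f}s  {page}")
    mean_think = (MIN_THINK_S + MAX_THINK_S) / 2
    print(f"100 users offer about {offered_load(100, mean_think, 0.0):.1f} requests/s")
```

With a mean think time of 6.5 s and negligible response time, 100 such users would offer roughly 15 page requests per second; any server delay lowers that figure, because each user waits for a response before thinking again.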

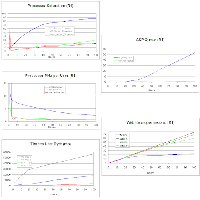

Various analyses were run on the data obtained, and these are illustrated in the accompanying charts. Pretty as they may be, they need further expert interpretation to answer the question, so we used our expert system and rating experience to produce a simpler answer.

That simpler answer is a performance rating as a function of the number of users. We created a measure that ranges from 10 to -10, where:

- 10 is great

- 5 is acceptable

- below zero, performance becomes unacceptable

- at -10 performance is terrible

Contributors to this measure include user response time (TTLB, time to last byte), processor load per user (MHz per user) and a comparison against an ideal server. This simple measure closely matched the conclusions drawn from a detailed examination of the contributing factors.
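The two directly measurable inputs named above can be computed as in the Python sketch below. The document does not give its formula for MHz per user; here it is assumed to be the clock rate multiplied by the fraction of processor time in use, divided by the number of simultaneous users. The function names are hypothetical, and the ideal-server comparison and the weighting that combine these inputs into the 10 to -10 rating are deliberately not reproduced, because the source does not describe them.

```python
from statistics import mean
from typing import Sequence


def mhz_per_user(clock_mhz: float, cpu_utilisation: float, users: int) -> float:
    """Processor load per user in MHz.

    Assumes MHz in use = clock rate x utilisation fraction (0.0 to 1.0),
    shared evenly across the simultaneous users.
    """
    if users <= 0:
        raise ValueError("users must be positive")
    if not 0.0 <= cpu_utilisation <= 1.0:
        raise ValueError("cpu_utilisation must be a fraction between 0 and 1")
    return clock_mhz * cpu_utilisation / users


def mean_ttlb_ms(samples_ms: Sequence[float]) -> float:
    """Mean time to last byte over one run's samples, in milliseconds."""
    if not samples_ms:
        raise ValueError("need at least one TTLB sample")
    return mean(samples_ms)


if __name__ == "__main__":
    # Illustrative inputs only, not figures from the tests described here.
    print(f"{mhz_per_user(266.0, 0.5, 10):.1f} MHz per user")
    print(f"{mean_ttlb_ms([120.0, 180.0, 150.0]):.0f} ms mean TTLB")
```

For example, a 266 MHz processor at 50% utilisation serving 10 users works out at 13.3 MHz per user under this assumption; these are illustrative inputs, not measurements from the tests.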

Conclusion

This finding was good enough to persuade us to move forward. Improvement will be easy, as the technique can be developed further with in-house skills.

Bonus Finding

The results appear to show that, on the target 266 MHz machine and with limited functionality, we can support 18 "hard users" simultaneously, and 36 at a stretch. Applications that use persistent storage need further analysis.
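The source does not say how the 18 and 36 user figures were read from the rating curve. One plausible approach, sketched below in Python as an assumption rather than the authors' method, is to find where the rating-versus-users curve crosses a chosen threshold, for example 5 (acceptable) or 0 (the boundary of unacceptable performance), interpolating linearly between measured load levels. The function name `users_at_threshold` is hypothetical.

```python
from typing import Optional, Sequence, Tuple


def users_at_threshold(
    curve: Sequence[Tuple[float, float]], threshold: float
) -> Optional[float]:
    """Estimate the user count at which the rating falls below `threshold`.

    `curve` holds (users, rating) points sorted by increasing user count.
    The first pair of neighbouring points where the rating goes from at or
    above the threshold to below it is located, and the crossing is found
    by linear interpolation between them. Returns None if no such crossing
    lies within the measured range.
    """
    for (u0, r0), (u1, r1) in zip(curve, curve[1:]):
        if r0 >= threshold > r1:
            # Fraction of the way from u0 to u1 at which the rating hits the threshold.
            fraction = (r0 - threshold) / (r0 - r1)
            return u0 + fraction * (u1 - u0)
    return None
```

Calling it once with a threshold of 5 and once with a threshold of 0 on the measured points would give a comfortable capacity and a stretch capacity respectively, if that is indeed how such bands are defined.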

Thanks to:

- DataSoft for hosting the test machine

- Creative Solutions for the server

- The following for various contributions: John Timney, Mike Russel, Richard Borrie, David Miles, Mike Kiss, Rod Drury, Dave Thompson, Matt Odhner and the Homer Team.